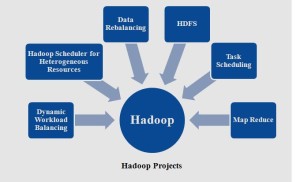

HADOOP PROJECTS for students

Hadoop projects for students draw on the data mining and cloud computing domains. The main aim of our Hadoop projects is to improve scalability in big data applications. We define big data as a collection of information gathered from various sources to form a global data set. Cloud computing gathers various services from cloud service providers and stores cloud user data in a specified location. Data mining likewise collects information from various sources to satisfy user requests. We develop Hadoop applications for M.Tech students that split the input data into multiple chunks and send them to mappers. The map functions are distributed across multiple nodes, each working on a small piece of the data. The JobTracker and TaskTrackers monitor the data flow, and the reducers store the results in HDFS.
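The split, map and reduce flow described above can be illustrated with a small in-memory sketch. It is not Hadoop code: the function names (`split_into_chunks`, `map_chunk`, `shuffle`, `reduce_counts`, `run_job`) and the word-count task are assumptions chosen for illustration, and everything runs in a single Python process instead of on a cluster.

```python
from collections import defaultdict


def split_into_chunks(records, chunk_size):
    """Split the input into fixed-size chunks, one chunk per mapper."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def map_chunk(chunk):
    """Mapper: emit a (word, 1) pair for every word in the chunk."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def shuffle(mapped_pairs):
    """Group intermediate values by key before they reach the reducers."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(grouped):
    """Reducer: sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def run_job(records, chunk_size=2):
    """Run the whole pipeline: split, map each chunk, shuffle, reduce."""
    chunks = split_into_chunks(records, chunk_size)
    mapped = [pair for chunk in chunks for pair in map_chunk(chunk)]
    return reduce_counts(shuffle(mapped))


if __name__ == "__main__":
    lines = ["big data on hadoop", "hadoop stores data in HDFS", "big clusters"]
    print(run_job(lines))
```

In a real cluster each chunk would be processed by a mapper on a different node and the reducer output would be written to HDFS; here the result is simply returned as a dictionary.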

Dynamic workload balancing in Hadoop:

We provide Hadoop with its HDFS and MapReduce components. The Hadoop Distributed File System (HDFS) stores all Hadoop user data. We obtain MapReduce information from the log files and use it to assess the workload of each server node. In IEEE Hadoop academic projects we propose a dynamic workload balancing algorithm that moves tasks from the busiest worker to another worker and so reduces job execution time. We use the CloudSim tool to simulate the performance of the dynamic workload balancing algorithm.
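The idea of moving tasks from the busiest worker to another worker can be sketched as a simple greedy loop. This is only an illustration under stated assumptions: the `rebalance` function, the representation of a worker as a list of positive task costs, and the rule for choosing which task to move are all hypothetical and are not taken from a specific published algorithm or from CloudSim.

```python
def rebalance(workers, max_moves=100):
    """Greedily move tasks from the busiest worker to the least loaded one.

    workers maps a worker name to a list of positive task costs and is
    modified in place. Returns the list of (cost, source, target) moves.
    """
    moves = []
    for _ in range(max_moves):
        load = {name: sum(tasks) for name, tasks in workers.items()}
        busiest = max(load, key=load.get)
        idlest = min(load, key=load.get)
        gap = load[busiest] - load[idlest]
        # Only a task cheaper than the gap narrows the imbalance when moved.
        candidates = [cost for cost in workers[busiest] if cost < gap]
        if not candidates:
            break
        # Prefer the task whose move leaves the two workers closest in load.
        task = min(candidates, key=lambda cost: abs(gap - 2 * cost))
        workers[busiest].remove(task)
        workers[idlest].append(task)
        moves.append((task, busiest, idlest))
    return moves


if __name__ == "__main__":
    cluster = {"w1": [4, 3, 3, 2], "w2": [1], "w3": [2]}
    print(rebalance(cluster))
    print({name: sum(tasks) for name, tasks in cluster.items()})
```

The largest per-worker load serves as a rough proxy for job execution time, since the job finishes only when the most heavily loaded worker finishes.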

Self-adaptive Hadoop scheduler for heterogeneous resources:

We use Hadoop to process large data sets. The self-adaptive Hadoop scheduler adapts readily to nodes with differing capabilities. It supports heterogeneous nodes by controlling each node's capacity and the number of tasks processed on each node at a time. We provide the scheduler with an elastic parameter that extends or shrinks node capacity depending on the available resources.
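A minimal sketch of the elastic capacity idea follows. The `Node` class, the `adapt_slots` and `assign` functions, and the utilization thresholds (`low`, `high`) are assumptions made for illustration; they are not part of Hadoop's scheduler API.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    slots: int = 2        # tasks the node may run at the same time
    min_slots: int = 1
    max_slots: int = 8


def adapt_slots(node, cpu_utilization, low=0.5, high=0.85):
    """Extend capacity on an under-used node, shrink it on an overloaded one."""
    if cpu_utilization < low and node.slots < node.max_slots:
        node.slots += 1
    elif cpu_utilization > high and node.slots > node.min_slots:
        node.slots -= 1
    return node.slots


def assign(tasks, nodes):
    """Fill each node up to its current slot count; return leftovers too."""
    assignment = {node.name: [] for node in nodes}
    pending = list(tasks)
    for node in nodes:
        while pending and len(assignment[node.name]) < node.slots:
            assignment[node.name].append(pending.pop(0))
    return assignment, pending


if __name__ == "__main__":
    fast, slow = Node("fast"), Node("slow")
    adapt_slots(fast, cpu_utilization=0.20)   # idle node gains a slot
    adapt_slots(slow, cpu_utilization=0.95)   # busy node loses a slot
    print(assign(["t1", "t2", "t3", "t4", "t5"], [fast, slow]))
```

Calling `adapt_slots` periodically with fresh utilization readings lets each node's capacity track its available resources, while `min_slots` and `max_slots` keep the elastic parameter within fixed bounds.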

Dynamic data rebalancing in Hadoop environment:

We perform a clustering operation, and the data in the Hadoop cluster is divided into a number of blocks. We replicate data according to the replication factor; as the number of copies increases, the storage and service time required in HDFS also increase. To overcome this data replication problem in big data applications, we use a dynamic data rebalancing algorithm with the Hadoop framework.
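The following sketch shows one possible way to rebalance replicated blocks across nodes. It is an assumption-laden illustration, not the HDFS balancer: the `rebalance_blocks` and `stored_copies` functions, the node names, and the block-count `threshold` are hypothetical.

```python
def stored_copies(num_blocks, replication_factor):
    """Total block copies the cluster holds for a given replication factor."""
    return num_blocks * replication_factor


def rebalance_blocks(nodes, threshold=1):
    """Move blocks from the fullest node to the emptiest one.

    nodes maps a node name to the set of block ids it stores and is
    modified in place. Balancing stops once the block counts of the
    fullest and emptiest nodes differ by at most `threshold`.
    """
    if threshold < 1:
        raise ValueError("threshold must be at least 1")
    moves = []
    while True:
        fullest = max(nodes, key=lambda name: len(nodes[name]))
        emptiest = min(nodes, key=lambda name: len(nodes[name]))
        if len(nodes[fullest]) - len(nodes[emptiest]) <= threshold:
            break
        # Never place a second replica of a block on the same node.
        # The fullest node holds more blocks, so this set is non-empty.
        movable = sorted(nodes[fullest] - nodes[emptiest])
        block = movable[0]
        nodes[fullest].remove(block)
        nodes[emptiest].add(block)
        moves.append((block, fullest, emptiest))
    return moves


if __name__ == "__main__":
    cluster = {
        "dn1": {"b1", "b2", "b3", "b4", "b5"},
        "dn2": {"b1"},
        "dn3": {"b2", "b3"},
    }
    print(rebalance_blocks(cluster))
    print({name: len(blocks) for name, blocks in cluster.items()})
```

The total number of stored replicas is unchanged by rebalancing; only their distribution across nodes changes.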

HDFS in Hadoop projects:

The Hadoop Distributed File System (HDFS) plays an important role in Hadoop projects: it enables data-intensive, distributed and parallel applications built on the MapReduce framework, which is modeled on Google's MapReduce. We store all MapReduce data in HDFS, where each file is stored as a series of blocks that are replicated for fault tolerance.
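Storing a file as a series of replicated blocks can be sketched as follows. The functions `split_into_blocks` and `place_replicas` are hypothetical, and the round-robin placement is a deliberate simplification: real HDFS uses a rack-aware placement policy. The usage example uses a 128 MB block size and a replication factor of 3, which are the usual defaults in recent Hadoop releases.

```python
def split_into_blocks(file_size, block_size):
    """Return the sizes of the blocks that a file of file_size occupies."""
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks, datanodes, replication=3):
    """Assign every block to `replication` distinct nodes, round robin."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of nodes")
    placement = {}
    for block in range(num_blocks):
        placement[block] = [
            datanodes[(block + offset) % len(datanodes)]
            for offset in range(replication)
        ]
    return placement


if __name__ == "__main__":
    mb = 1024 * 1024
    blocks = split_into_blocks(300 * mb, 128 * mb)
    print([size // mb for size in blocks])
    print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"]))
```

Because each block lives on several distinct nodes, the loss of a single node leaves at least one copy of every block available.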

Data locality for virtualized Hadoop based on task scheduling approach:

The major problems we handle are cluster management and fluctuating resource utilization in cloud virtual machines. Various scheduling algorithms have been implemented, but they do not retain high performance when data is distributed across two levels, the virtual machine and the physical machine. Our project team implements a weighted round robin algorithm to improve data locality for virtualized Hadoop clusters.
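As an illustration of weighted round robin, the sketch below uses the "smooth" variant of the algorithm, which spreads the picks of heavily weighted nodes across the schedule. The function name, the virtual machine names, and the choice of weights are assumptions; one plausible option, assumed here, is to weight each virtual machine by the number of input blocks it holds locally.

```python
def weighted_round_robin(weights, num_tasks):
    """Return the node chosen for each of num_tasks tasks.

    weights maps a node name to a positive integer weight. Over a full
    cycle of sum(weights) picks, each node is chosen `weight` times.
    """
    total = sum(weights.values())
    current = {node: 0 for node in weights}
    order = []
    for _ in range(num_tasks):
        # Every node earns credit in proportion to its weight.
        for node, weight in weights.items():
            current[node] += weight
        # The node with the most credit gets the task and pays for it.
        chosen = max(current, key=current.get)
        current[chosen] -= total
        order.append(chosen)
    return order


if __name__ == "__main__":
    # Hypothetical weights: number of local input blocks on each VM.
    print(weighted_round_robin({"vm1": 3, "vm2": 1, "vm3": 1}, 5))
```

With these weights, `vm1` receives three of every five tasks, so more tasks run where more of the data already resides.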

Distributed Hadoop MapReduce:

We have developed more than 75 Hadoop projects using various techniques for grid-based applications. We provide MapReduce as a data processing platform. Hadoop on the grid differs from the normal MapReduce framework in that it ensures a free, dynamic and elastic MapReduce grid environment.
